Are you looking for ways to protect your content from being copied or duplicated?

With the rise of content scrapers, rewriting tools, AI content generators, and simple, old-fashioned copy-paste, protecting your content from duplication is more challenging than ever.

With millions of live pages and thousands more published daily, it is easy for anyone to get your content. That same volume makes it much harder to find out who copied your content and to protect it.

To add to this, you don’t have to just worry about others copying your content. There is a real possibility of duplicate or similar pages and posts on your site, which can affect your Search Engine Optimization (SEO).

In this article, we will look at the best ways to identify if your website content is stolen and how you can protect it. We will also look at the best ways to avoid duplicating content on your own site.

But first, let us look at why it is important to protect your content from duplication by others.

Why You Should Protect Your Content From Duplication

Even before getting into the legal issues that copying content brings, there are still issues of morality and ethics.

For one, creators spend time, resources, and skill creating content. It is unfair for someone else to come in and just take that content. Once stolen, it is hard for the original content creator to get the full recognition they deserve.

This could also lead to these creators losing money and even more opportunities in the future.

Here are other reasons you should protect your content from being copied by other sites.

- It is hard for Google to separate duplicated content from the original page. As a result, the copied version may end up ranking higher than yours.

- Your site visitors won’t know who the authority on the content is if they see it on multiple websites. This can lead to distrust or simply abandoning all the websites they saw the content on.

- There is the risk of your message being changed or twisted to suit the website owners who stole your content. This can mislead readers.

- It may affect your traffic as Google may send some of it to your duplicated content.

- There is a risk that Google will think you stole the content and penalize you.

To add to these, in some cases, content copying can lead to legal action. Copyright laws protect original works. If someone violates these laws, they may face legal action, including fines and settlements.

How to Spot if Your Content Has Been Duplicated

To protect your content, you first need to know when it has been stolen. That means actively monitoring for copies rather than waiting to stumble on them. You can use the methods listed below to get started.

1. Set Up Google Alerts

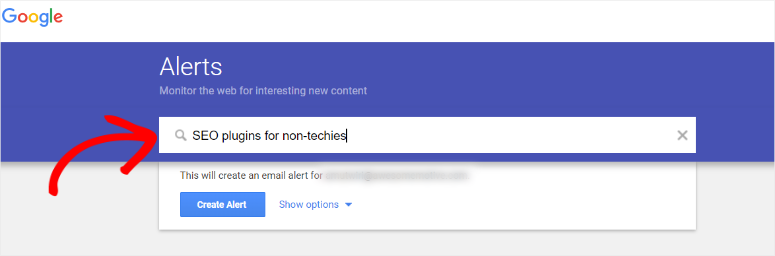

Setting up Google alerts is a great way to know when competitors post new content about a subject. But it can also be used to know when your content has been posted online by someone else.

When people copy content, they usually do not put much effort into changing it. They will just lift it as it is and add it to their site.

For this reason, you can customize Google alerts for specific, uncommon keywords not heavily used by your competitors. This way, you get fewer alerts, and each one is more likely to point to a copy of your post.

Let us explain:

For example, say your content is about “SEO WordPress plugins.” If you set up a Google alert for this key phrase, you may get an overwhelming number of alerts every day, because many sites write about this topic.

But even if you write about this topic and it is your primary focus, you can add noncompetitive keywords relating to the subject, like, let’s say, “SEO plugins for non-techies.”

Even though this keyword relates to the subject, it is not heavily used. This means that if you set up a Google alert targeting “SEO plugins for non-techies,” you will get fewer notifications. When one does arrive, it is worth checking whether the page copied your content.

2. Use Plagiarism Checkers

Another dependable way to check if your text content has been copied is by passing it through a plagiarism checker. This is a quick way to find all the sites that have copied even small sections of your content.

Here are two easy-to-use tools you can start with.

- Grammarly: Even though Grammarly is primarily a grammar checker, the premium version is great at plagiarism checking. This means you can check for plagiarism and improve your writing at the same time.

- Copyscape: Copyscape is a simple tool you can use by pasting your content in the text box. It will search online, find any content matching yours, and list all of the matches for you. Once you click the links provided, it will display the percentage of your content that each page has duplicated. This tool will also highlight the copied content in yellow so you can easily find it on the page.

Even though the tools mentioned above are premium, there are plenty of plagiarism checkers with free versions out there you can use to help you check for plagiarism. Check out this article on Grammarly vs. Hemingway vs. Jetpack to help you choose the best spell checker.

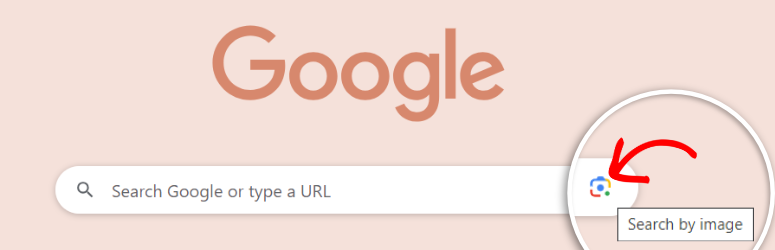
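To get a feel for what a "percentage of copied content" means, here is a minimal Python sketch that compares two texts using overlapping five-word sequences. The function names and the five-word window are our own assumptions for illustration, and this is not how Grammarly or Copyscape work internally.

```python
import re


def shingles(text: str, size: int = 5) -> set[tuple[str, ...]]:
    """Return the set of overlapping word sequences ("shingles") in text."""
    words = re.findall(r"[a-z0-9']+", text.lower())
    if len(words) < size:
        # Very short texts become a single shingle (or none if empty).
        return {tuple(words)} if words else set()
    return {tuple(words[i:i + size]) for i in range(len(words) - size + 1)}


def copied_fraction(original: str, suspect: str, size: int = 5) -> float:
    """Fraction of the original's shingles that also appear in the suspect text."""
    source = shingles(original, size)
    if not source:
        return 0.0
    return len(source & shingles(suspect, size)) / len(source)
```

A result close to 1.0 suggests the suspect page lifted most of your text as it is, while a result near 0.0 suggests little or no word-for-word overlap. Rewritten or paraphrased copies will score low with this approach, which is one reason dedicated tools are still worth using.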

3. Reverse Image Search

It is not just written content that is commonly stolen; images are actually more widely duplicated because all someone has to do is download them. However, even though it is very easy for your images to be stolen, it is much harder than with text to tell when they have been.

The best way to spot if your image has been stolen is by doing a reverse image search with Google or Bing. Reverse image search has improved, and finding photos similar to yours is now much easier.

But, unless it is a very specific photo, it may not be easy to prove that it was actually copied. To help you with this, you can do the following:

- If your images have a watermark, check whether it is still visible or whether it has been cropped out.

- Check your traffic analytics to see if the site with your images recently visited your WordPress website around the time they uploaded the image.

- Check to see if the size and file format of the image match yours.
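The last check in the list above can be done by hand, but a short script makes it repeatable. The Python sketch below is one possible approach, with function names of our own choosing: it reads the first bytes of each file to guess the real format (regardless of the file extension) and then compares format, byte size, and exact content.

```python
# Leading "magic" bytes that identify common web image formats.
SIGNATURES = {
    b"\x89PNG\r\n\x1a\n": "png",
    b"\xff\xd8\xff": "jpeg",
    b"GIF87a": "gif",
    b"GIF89a": "gif",
}


def sniff_format(data: bytes) -> str:
    """Guess the image format from the first bytes, ignoring the file extension."""
    for magic, name in SIGNATURES.items():
        if data.startswith(magic):
            return name
    # WebP files start with "RIFF", four size bytes, then "WEBP".
    if data[:4] == b"RIFF" and data[8:12] == b"WEBP":
        return "webp"
    return "unknown"


def compare_images(mine: bytes, theirs: bytes) -> dict[str, bool]:
    """Report whether two image files share format, byte size, and exact bytes."""
    return {
        "same_format": sniff_format(mine) == sniff_format(theirs),
        "same_size": len(mine) == len(theirs),
        "identical": mine == theirs,
    }
```

You could feed it the bytes of your original and the downloaded copy, for example with `Path("my-photo.jpg").read_bytes()` from Python's `pathlib` (the file name is a placeholder). Keep in mind that a copy that was resized or re-compressed will not match on size or bytes, so a mismatch does not prove the image is not yours.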

Now that we have looked at ways to identify if your content has been stolen, let us look at how to protect it.

Before we look at protecting your content from duplication by others, something beyond your control, let’s first look at how to avoid duplicating your own content, something you can control.

How To Avoid Having Duplicate Content on Your Own Website

One of the easiest ways to find out if you have internally duplicated content is by copying a few sentences of your most unique content and pasting them on Google. If you get multiple results from your site, it is more than likely you have duplicated content.

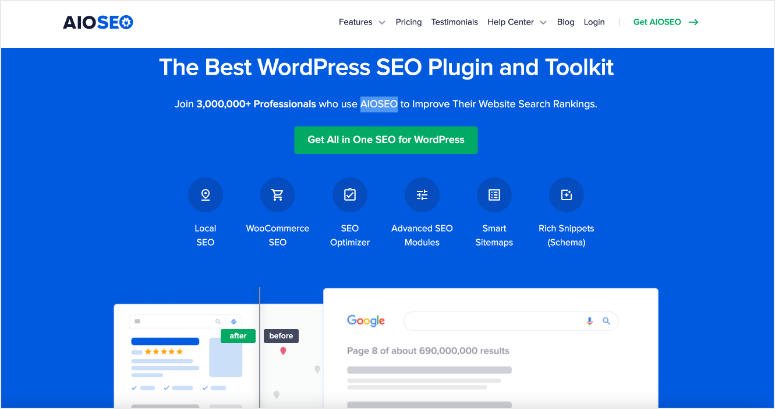

The good news is you can quickly solve content duplication on your site with AIOSEO.

This is the most powerful plugin for setting up Canonical URLs and redirects straight from your WordPress dashboard to help you solve duplicated content.

It is also the best WordPress SEO plugin to help you edit robots.txt files and noindex pages. A noindex setting tells Google not to index the selected pages, making it a great way to solve content duplication. You can do all this with AIOSEO without any coding experience or using Google Search Console. This makes it great for beginners and seasoned bloggers alike.

Let’s discuss the various ways you can duplicate content on your site and how the All in One SEO plugin can help you.

1. Similar Titles

One of the easiest ways to duplicate your content is by having posts with similar titles.

While this may not affect your rankings too much because titles account for a small percentage of your content, it is still important to avoid this issue. You need to ensure you take advantage of every SEO element to keep up and outdo your competitors, and this is one of them.

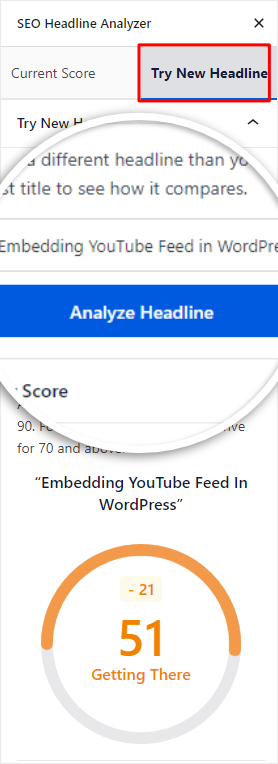

AIOSEO comes with a Headline Analyzer. This is a feature that helps you improve your title for both Google and your readers.

It helps you come up with the best title length, ensures you add important keywords, and helps you set the right tone for the article. You can also type in an alternative headline. The plugin will analyze it to see if it is a better option for the post.

AIOSEO also offers dynamic tags that you can use in your titles. This can help you generate relevant titles that are automatically updated based on the content.

All these tie together, helping you create a title that not only increases your click-through rate but also reduces the chances of title duplication.
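If you want a quick check of your existing titles, you can export them and compare them with a few lines of Python. This is only a rough sketch built on the standard library's `difflib`; the function names and the 0.8 threshold are our own assumptions, and it is not part of AIOSEO.

```python
from difflib import SequenceMatcher
from itertools import combinations


def normalize(title: str) -> str:
    """Lowercase a title and collapse whitespace so trivial differences are ignored."""
    return " ".join(title.lower().split())


def similar_titles(titles: list[str], threshold: float = 0.8) -> list[tuple[str, str, float]]:
    """Return pairs of titles whose similarity ratio meets the threshold."""
    pairs = []
    for first, second in combinations(titles, 2):
        ratio = SequenceMatcher(None, normalize(first), normalize(second)).ratio()
        if ratio >= threshold:
            pairs.append((first, second, round(ratio, 2)))
    return pairs
```

Any pair it returns is a candidate for rewording one of the titles. You may need to raise or lower the threshold depending on how closely your titles follow a common pattern.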

2. Similar Topics

It is not uncommon for sites to create various pieces of content on the same topic. But sometimes, these topics are so similar that it does not make sense to have these pages existing together on the same site.

What ends up happening is that these pages compete against each other since they have similar keywords and content. This can reduce your overall site traffic and the authority of all these pages.

To help with this, you can combine all the relevant content on one page and delete the rest, so you have one authoritative post.

Once you delete the other posts, Google may still send traffic to their old URLs. To handle this, add a 301 redirect, which tells Google that the post has moved permanently and sends visitors to the new URL.

AIOSEO offers the easiest way to create 301 redirects.

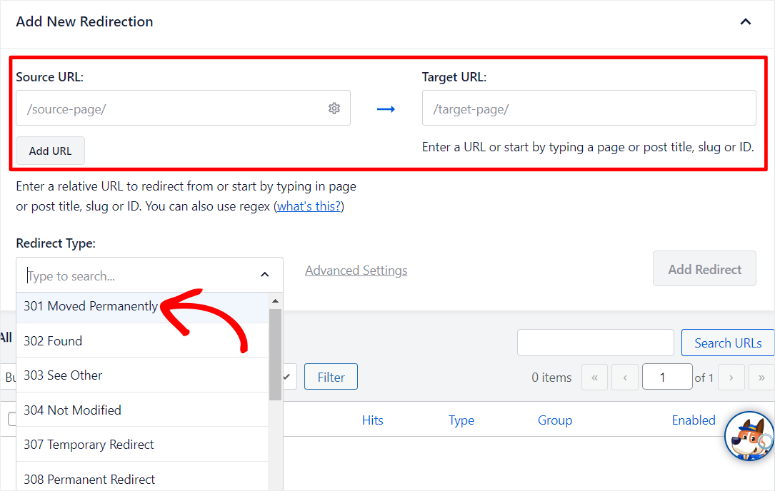

When you go to the Redirects menu after installing and activating AIOSEO, you will see the “Source URL” bar. Here, you will place the deleted post’s URL. Then, next to it, you will see the “Target URL” bar. Here, you will paste the URL of the post you kept after deleting the similar posts on the topic.

Next, select the type of redirect you want to use from the “Redirects Type” dropdown. In this case, you will go with a 301 redirect.

Since there are many types of redirects, making it hard to differentiate them quickly, AIOSEO gives a short description of what each type of redirect does.
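If you merge posts more than once, your redirects can end up chained, with one old URL pointing to another old URL that points to the final post. The Python sketch below shows one way to review a list of redirects before you enter them: it points every old URL straight at its final target and flags loops. The function name and the example paths are hypothetical.

```python
def flatten_redirects(redirects: dict[str, str]) -> dict[str, str]:
    """Point every source URL straight at its final target.

    Raises ValueError if the map contains a redirect loop.
    """
    flattened = {}
    for source in redirects:
        seen = {source}
        target = redirects[source]
        # Follow the chain until we reach a URL that is not redirected itself.
        while target in redirects:
            if target in seen:
                raise ValueError(f"redirect loop involving {target}")
            seen.add(target)
            target = redirects[target]
        flattened[source] = target
    return flattened
```

For example, passing `{"/old-1/": "/old-2/", "/old-2/": "/guide/"}` would give you a map where both old paths lead directly to `/guide/`, so visitors and crawlers reach the surviving post in a single hop.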

3. Categories and Tags

Another way you can easily duplicate content on your site is through tags and categories.

Categories and tags are used to organize your site and structure your content. This makes it easier for your audience and search engines to find it. But, they serve different purposes and can lead to duplicated content in unique ways.

Let’s start with categories.

Categories are used to organize your content using a hierarchy system. For example, take these two category examples, “Apples” and “Oranges.” Under these categories, you can talk about recipes, benefits, varieties, and everything else under the topics.

When you write about something that covers both apples and oranges, like “the best fruits to stay healthy,” you may end up placing the article in both categories. As a result, you will have two URLs to the same post.

- https://mysite.com/apples/the-best-fruits-to-stay-healthy

- https://mysite.com/oranges/the-best-fruits-to-stay-healthy

In the above examples, only the category in the URL path changes; the post remains the same.

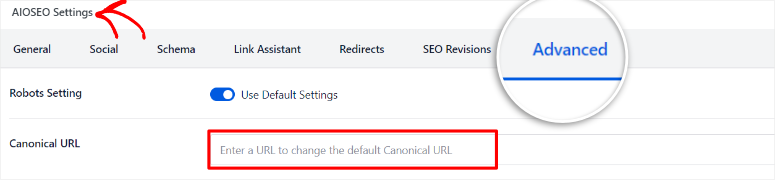

In this case, we can use AIOSEO to create Canonical URLs.

This ensures Google does not get confused with different URLs leading to the same content. A Canonical URL tells Google which is your preferred URL so that it can use it for search results without thinking you have duplicate content.

You do not need any coding experience to create Canonical URLs with AIOSEO.

With AIOSEO installed, go to your post editor or add a new post. In the post editor, scroll down to AIOSEO “Settings,” and then go to Advanced. Find the Canonical URL bar and insert the URL that you want Google to use in search engine results. And you are done.

Another way to control which pages appear in search engine results, and so help with content duplication, is through your robots.txt file.
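Once a canonical URL is set, it normally shows up in the page's HTML as a `<link rel="canonical">` tag. If you want to confirm what a page declares, the Python sketch below pulls that tag out of a page's HTML source. The class and function names are our own, and the example URL reuses the sample address from earlier in this article.

```python
from html.parser import HTMLParser


class CanonicalFinder(HTMLParser):
    """Collect the href of every <link rel="canonical"> tag in a page."""

    def __init__(self) -> None:
        super().__init__()
        self.canonicals: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag != "link":
            return
        attributes = dict(attrs)
        # rel can hold several space-separated values, so split before checking.
        rel_values = (attributes.get("rel") or "").lower().split()
        if "canonical" in rel_values and attributes.get("href"):
            self.canonicals.append(attributes["href"])


def find_canonicals(html: str) -> list[str]:
    """Return all canonical URLs declared in the given HTML source."""
    parser = CanonicalFinder()
    parser.feed(html)
    return parser.canonicals
```

Ideally, both category versions of the post return the same single URL. An empty list means no canonical tag was found, and more than one entry is worth investigating because conflicting canonical tags can confuse search engines.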

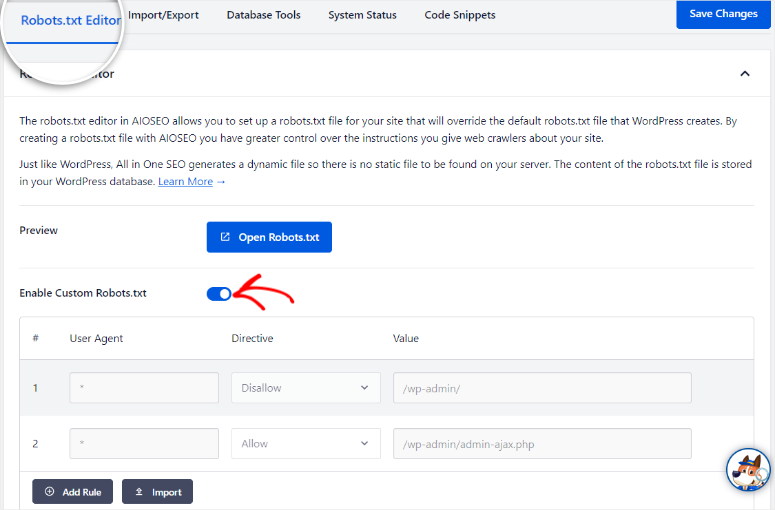

AIOSEO allows you to override the default robots.txt file that WordPress provides and helps you set up a custom one. Through this, you can tell Google’s bots not to crawl your duplicated pages without deleting any content on your site.

To do this, go to the All in One SEO menu in your WordPress dashboard. You will find the Robots.txt file under the Tools submenu. To create a custom robots.txt file, enable a custom robots.txt toggle switch and add the relevant fields below it.

Enter the “User Agent” and set the Directive to “Disallow” for the page you do not want Google crawlers to see. Finally, enter the page URL under “Value” to tell AIOSEO which page crawlers should skip.

By default, WordPress creates category archive pages. These are pages that show all the content in a similar category in one place. Since they can contain a lot of information, your category archive pages can outrank your actual content.
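Before relying on a new rule, it helps to confirm that it blocks the URL you meant and nothing else. The Python sketch below uses the standard library's `urllib.robotparser` to test a set of rules against a URL. The rules shown are a hypothetical example based on the sample URLs above, not output copied from AIOSEO.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical rules: block all crawlers from one duplicated URL path.
ROBOTS_TXT = """\
User-agent: *
Disallow: /oranges/the-best-fruits-to-stay-healthy
"""


def is_crawlable(robots_txt: str, url: str, user_agent: str = "Googlebot") -> bool:
    """Return True if the given rules allow user_agent to fetch url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)
```

With these rules, the `/oranges/` version of the post should come back as not crawlable while the `/apples/` version stays crawlable. Note that this only checks crawl rules; it does not tell you whether a page is currently in Google's index.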

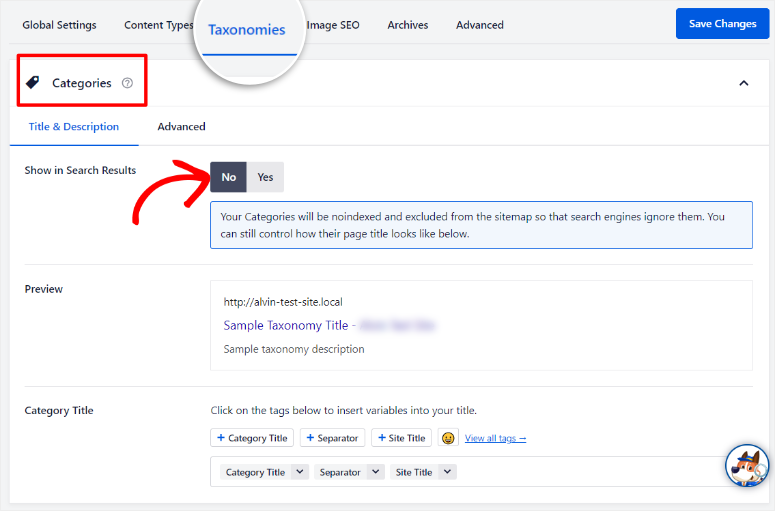

To avoid this, you can use AIOSEO.

Simply go to the AIOSEO “Search Appearance” menu and switch off category archive pages from showing up in SERPs with the toggle button.

You do not need to do anything else. It is that easy.

Let’s look at tags next.

Like categories, tags are used to structure your content but in a more specific way.

For example, the category may be “dogs,” but a tag may be “protein treats.” WordPress also creates tag archive pages for similar content, which can also rank just like the category archive pages.

The good news is that you can sort out tag content duplication with AIOSEO in much the same way as you would with categories. You will just follow the same steps you took to deal with category content duplication.

You can use Canonical URLs to tell Google not to rank tag archive pages. With this plugin, you can also simply switch off tag archive pages from showing up in search results the same way you did for categories.

4. Trailing Slash

Another overlooked detail that often leads to duplicated pages is the trailing slash.

Some URLs may end with a slash while others do not, confusing Google about which URL you prefer. Since both these URL structures are acceptable, it makes it even more challenging for Google to decide which to add to search results.

For example, you may find URLs from the same site that end with a slash, like https://mysite.com/page/, and others without a slash at the end, like https://mysite.com/page.

Solving this with AIOSEO is also very straightforward.

You will need to set a Canonical URL as demonstrated above to tell Google which URL you prefer. You can also delete one of the pages and add a 301 redirect with AIOSEO to redirect your visitors to the one left.
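To find out whether your site has this problem, you can run a list of your page URLs (from a sitemap or a crawl export, for instance) through a short script. The Python sketch below groups URLs that differ only by a trailing slash. The function names are ours, and treating the trailing-slash form as the preferred one is just an assumption for the example.

```python
from collections import defaultdict
from urllib.parse import urlsplit, urlunsplit


def with_trailing_slash(url: str) -> str:
    """Return url with exactly one trailing slash on its path."""
    parts = urlsplit(url)
    path = parts.path.rstrip("/") + "/"
    return urlunsplit((parts.scheme, parts.netloc, path, parts.query, parts.fragment))


def slash_variants(urls: list[str]) -> dict[str, list[str]]:
    """Group URLs that differ only by a trailing slash."""
    groups = defaultdict(list)
    for url in urls:
        groups[with_trailing_slash(url)].append(url)
    # Keep only groups where more than one distinct spelling was seen.
    return {key: found for key, found in groups.items() if len(set(found)) > 1}
```

Each group it returns is a pair of URLs that needs a canonical URL or a 301 redirect. The sketch is meant for page URLs, so leave out file URLs such as images before running it.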

Now that we have looked at how to avoid content duplication on your site, let us see how you can protect your content from duplication by others.

How to Protect Your Content from Duplication by Other Sites

1. Use Excerpts in RSS Feed

RSS feeds are a great low-budget way to get your content out there.

But RSS feeds are also one of the easiest places for content scrapers to steal content. To ensure you still take full advantage of RSS feeds, use content excerpts for RSS feeds instead of the entire content piece.

First, you should make sure your excerpts are informative and well-written to attract readers on RSS feed sites.

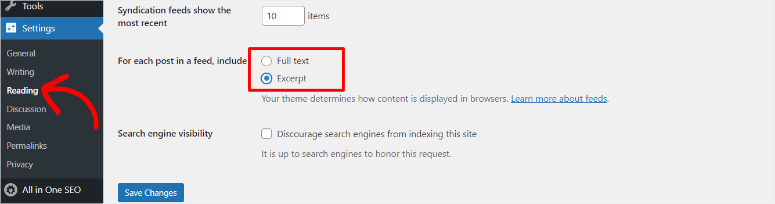

Next, set up your RSS feed in WordPress to show only excerpts of the posts.

To do this, go to Settings » Reading in your WordPress dashboard, and scroll down to the section that says, “For each post in a feed, include.” By default, it will be set to “Full text,” so select the “Excerpt” option instead.

Next, click Save Changes. Now, your content will display excerpts in RSS feeds instead of the entire post.
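If you want to double-check the result, you can inspect your feed's XML. The Python sketch below lists any feed items that still carry a `<content:encoded>` element, which is where RSS 2.0 feeds usually place the full post. We are assuming your feed follows that convention, and the function name is our own.

```python
import xml.etree.ElementTree as ET

# Namespace used by the <content:encoded> element in RSS 2.0 feeds.
CONTENT_NS = "{http://purl.org/rss/1.0/modules/content/}"


def items_with_full_text(feed_xml: str) -> list[str]:
    """Return the titles of feed items that carry a <content:encoded> element."""
    root = ET.fromstring(feed_xml)
    titles = []
    for item in root.iter("item"):
        if item.find(f"{CONTENT_NS}encoded") is not None:
            titles.append(item.findtext("title", default="(untitled)"))
    return titles
```

An empty list suggests the feed is sending excerpts only. If titles still show up after you change the setting, a caching plugin or feed service may be serving an older copy of the feed.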

2. Copyright Your Site

In most places in the world, content is automatically copyrighted and protected by law as soon as it is created. But such rules are hard to enforce.

You need to clearly state that your site is copyrighted to remind everyone and scare off anyone who would want to copy your content.

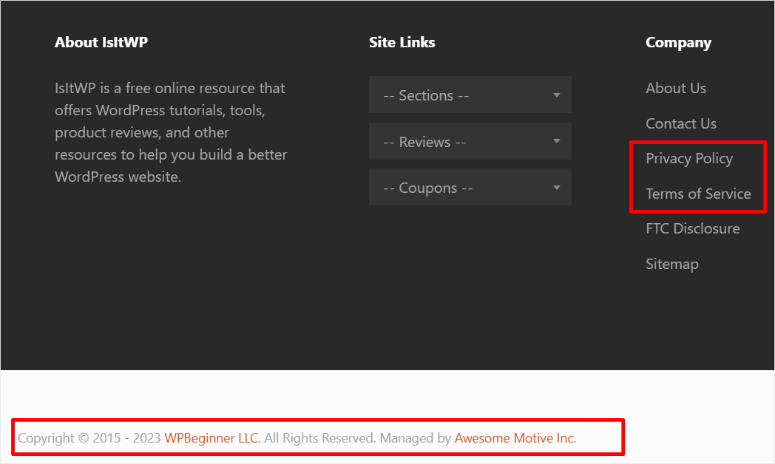

The easiest way to copyright your content is by adding your site information at the bottom as a footer, as most sites do.

It is also important to add Terms and Conditions and Privacy Policy pages to your WordPress website, which include information on how users can and cannot use your content.

These pages help your site look more professional, scaring off anyone who wants to copy your content. Most importantly, these pages can provide you with legal grounds in case of any duplicate content issues.

For example, if you scroll to the bottom of every page on IsitWP.com, you will find links to the Privacy Policy and Terms of Service pages. You will also notice a line of text at the very bottom of the webpage stating that copyright is reserved.

3. Disable Right Clicking

One of the best ways to protect your content from being copied is by turning off right-clicking. Right-clicking allows users to copy text or download images easily and is one of the quickest ways to duplicate content.

You can manually insert HTML code into your site that disables right-clicking. There are also plenty of WordPress plugins that can help you disable right-clicking on written text, such as Disable Right Click For WP, a free plugin you can install.

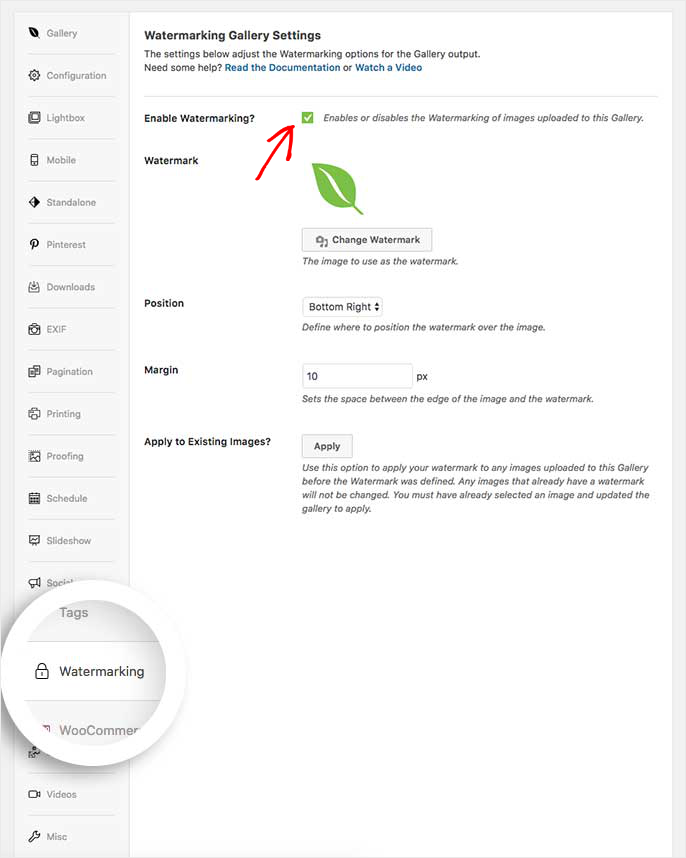

On the other hand, you can use Envira Gallery to protect your images from right-clicking.

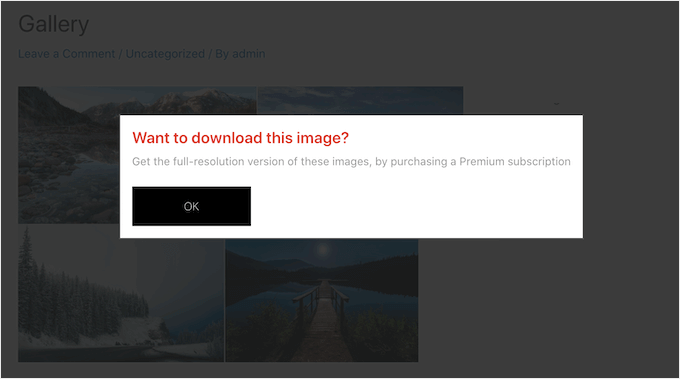

Envira Gallery is the best WordPress gallery plugin to protect your creativity and monetize it. It offers a Protection addon that allows you to disable right-clicking on image galleries.

After installing and activating Envira Gallery, you will need to enable the Protection addon. After you create a new gallery, enable image protection in the settings. This prevents visitors from right-clicking on images within the gallery.

You can also set up a popup alert for users attempting to right-click, with a custom message about how they can get your images legally and ethically. This feature is particularly useful for protecting images on a photography portfolio site or product galleries in eCommerce stores, because it improves content security and prevents unauthorized downloads.

However, it is important to point out that disabling right-clicking may reduce the quality of your site’s user experience. Your site visitors expect to have certain privileges that allow them to use your site more freely. This includes right-clicking, copying your text, and so on.

To help with this, you can add a contact form from WPForms, allowing your visitors to quickly contact you about using your images or content. You can also add Live Chat to help instantly communicate with your readers in real-time.

All the same, disallowing right-clicking is still an effective way to protect your content from duplication.

4. Watermarking Images & Videos

Watermarking your videos and images is one of the most effective ways to protect your content.

It not only protects your content but also helps with branding, as people will quickly spot where the content is from. To add to this, it is less likely that people will copy your content in the first place if they see a watermark on it.

A great option to help with content watermarking is Envira through its addon.

The Envira watermarking addon is the best way to protect your images as it does not affect the image performance, quality, or size. It is so secure that once your watermarked image is live, even you cannot remove the watermark. You will have to delete it and upload a new image.

It is easy to use as all you have to do is enable the watermarking checkbox after installing the addon. Plus, you can choose the exact position and margin you want to place the watermark.

Envira gives you the flexibility to add your logo, copyright symbols, and text-based watermarks to ensure your content still looks great. At the same time, the sharp and clear watermark Envira creates will help with brand identity.

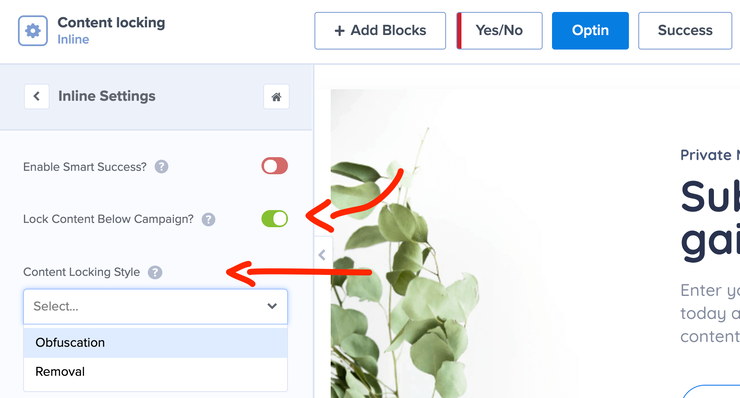

5. Content Lock

Content locking is one of the best strategies to help with content duplication by other sites.

It helps you restrict access to specific parts of your content, requiring users to register, share, or even pay to unlock full access. This discourages unauthorized duplication and allows you to maintain better control over who accesses your content.

It also adds an additional layer of protection against content scraping or copying, making it more challenging for other websites to copy your entire post.

To help you with this, you can try OptinMonster, the best content lock plugin.

It offers different content lock styles so you can choose one that best fits your audience. For example, you can go with the Obfuscation content lock style, which adds a professional-looking blur to your content.

This ensures your visitors still remain interested in your content, while protecting it from anyone who would like to steal it. Other content lock styles from OptinMonster include Removal, Blur, Downgrade, Upside Down, Scale, and Highlight.

With OptinMonster, content locking is primarily used to help grow engagement and boost leads and conversions. But since it is such a dynamic marketing tool, its content lock feature is also the best at protecting your content.

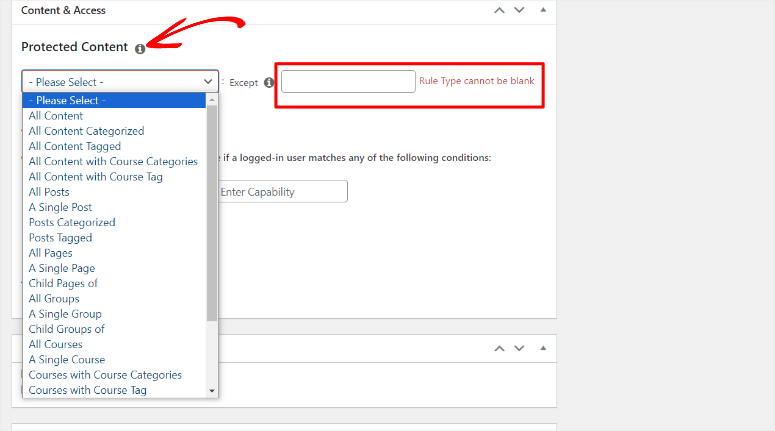

MemberPress is also a great plugin to help you lock and protect your content. You can also customize how you lock your content to suit your audience and business model or build FOMO around your site.

As a membership plugin, it can also help you monetize your content through subscriptions and paywalls. You can also easily build a community around your niche, leading to recurring revenue and more trust from your audience.

6. Password Protect your Content

If you feel content locking is not suitable for you, you can try password protection.

Password protection is another effective way to protect your content from being duplicated, as it requires readers to register on your site before accessing it. This means that only people who have signed up for your site will have access to all the content on your site.

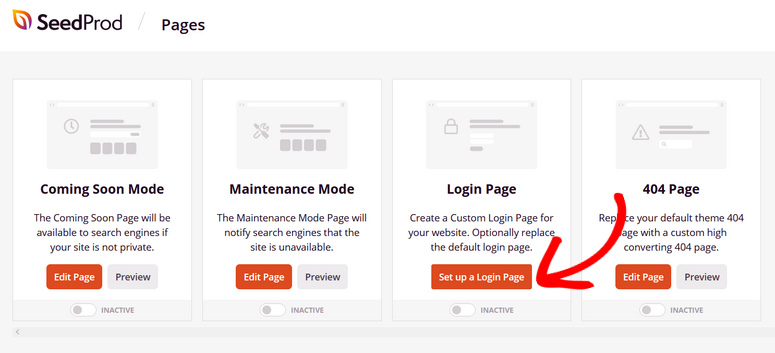

To help you password-protect your content, you can try SeedProd, the best password and login page builder.

It comes with over 300 templates, including many page templates that can help you password-protect your site. You can then customize these templates further with a simple drag and drop interface to create something truly special for your site. You can change the page colors and also the font type, size, and color.

There are two main ways you can password-protect your site with SeedProd.

The first is by building a login page for your visitors. To do this, you can choose one of the many login page templates from SeedProd and customize it. You can also add prebuilt page elements, such as headers, footers, and FAQs, to communicate better with your audience.

The second method you can use is using the coming soon page. This will allow you to lock the entire site if it is under construction or if you want to give access to only members. It is also a great way to ensure you protect your entire site with a password.

SeedProd uses Google reCAPTCHA, which helps keep your site from being spammed or attacked, adding another layer of protection.

But remember, SeedProd is not just designed to help you create login pages. It is also the best plugin to help you create any type of page and even a full site.

Another plugin you can use to create a login page to protect your content with a password is WPForms.

WPForms comes with 1200+ form templates which can help you build a form in minutes. It offers a User Login Form template that you can further customize with its drag and drop form builder.

You can also use MemberPress to password-protect your content without adding any code.

This method may not be suitable for everyone, as it can discourage people from visiting your site. But, password protection could be helpful if you offer premium content or educational material through memberships with a plugin like MemberPress.

Password protection is also a great way for creatives to monetize their content through membership sites and ensure only people interested in what they are offering can access it.

7. Geo-Lock your Content

Geo-locking your content is a reliable way to ensure only people from a certain location access your content.

By having a smaller group of people access your content, it is less likely to be stolen, and if it is, you can quickly spot it. This can help you use your resources better on a narrow audience, helping you get better conversions.

The best way to geo-lock your content is through Sucuri.

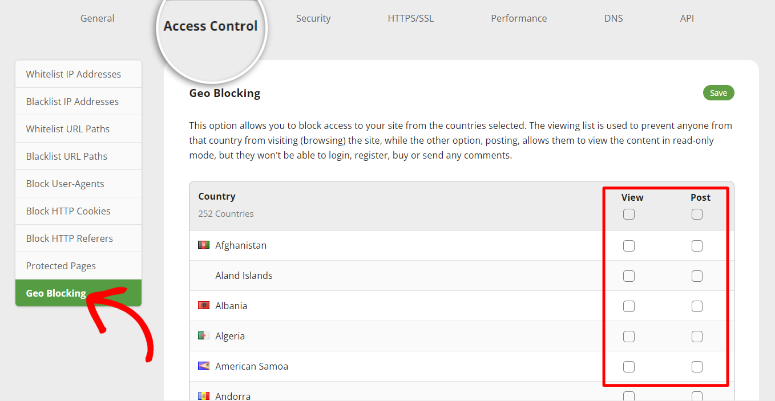

Sucuri is a cloud-based firewall tool that allows you to geo-lock your content by country. It uses a simple check-box system that lets you do two things.

You can block people in a country from viewing your content, which means they cannot browse your site at all. Or you can block posting, which stops them from registering, buying, posting, or otherwise interacting with your site while still letting them browse it.

Sucuri’s way of geo-locking content gives you flexibility on how you can protect your content and is incredibly easy to set up. For more options, check out this article on the best geotargeting WordPress plugins.

8. Reach Out to DMCA

Now that you have taken proactive measures to secure your content, you can take legal steps to protect it further.

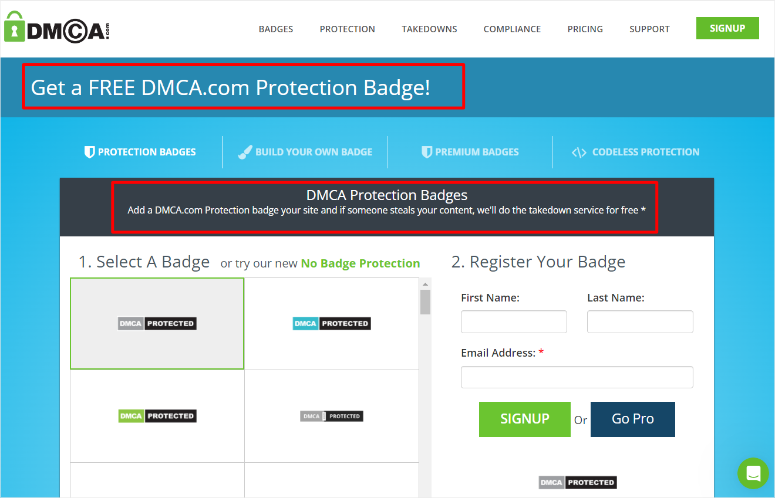

The DMCA (Digital Millennium Copyright Act) is a US copyright law, and DMCA.com is a service built around it that can help you protect your intellectual property by helping you take down content copied from your site.

To help you do this, DMCA.com offers a free badge you can place on your site to scare off anyone who intends to steal your content. It also uses content scanning tools to find places where your content has been copied and will help you get it taken down.

In fact, DMCA.com states that if anyone steals content protected by its badge, it will do a takedown at no charge.

To add to this, you can also watermark your images with the DMCA logo, giving you further copy protection.

We hope you enjoyed learning about how to protect your content from duplication. If you have more questions, check out the FAQs below.

FAQs: Protecting Your WordPress Site From Duplicate Content

How does duplicate content affect SEO?

Duplicate content can harm SEO by confusing search engines on which version to rank, leading to lower visibility. It can dilute the page’s authority and impact user experience.

Can AIOSEO reduce meta content duplication?

Yes, AIOSEO can help reduce meta content duplication by providing tools, like dynamic tags, to create unique meta descriptions for each page. It also offers auto-generated descriptions for your posts and pages to help generate unique and relevant metadata automatically.

How do I know if my URL is Canonical?

AIOSEO enables you to set canonical URLs, indicating the preferred version of a page. To check, go to the AIOSEO “Settings,” navigate to the “Advanced” tab, and verify the Canonical URL settings for each page.

What happens if I get a DMCA notice?

If you receive a DMCA notice, it means someone claims you copied content from them or did not follow their copyright rules. It is important to take this matter seriously. Remove or address the disputed content, and respond to the notice. If you fail to comply, you may end up in a legal dispute, which could lead to you paying fines or even a settlement. Your website’s reputation and search engine rankings could also suffer.

That’s it! Now that you understand the different ways you can protect your content, check out this AIOSEO review. Learn what else this SEO plugin can do for you beyond helping you avoid content duplication, such as adding internal links to your site.

To add to that, here are additional articles you may be interested in reading.

- How to Make a Quiz in WordPress to Boost Engagement

- How to Embed Instagram Feed in WordPress (5 Easy Steps)

- 15 Best Content Marketing Tools and Plugins for WordPress

The first two articles will teach you how you can diversify your content and improve engagement. Such types of content can be more difficult to steal or duplicate. The last article will show you how to market your content better.
