Without a doubt, Search Engine Optimization (SEO) is one of the most cost-effective and targeted ways to drive traffic to your site. With SEO, you help your target users discover your content at the moment they’re looking for it.

Contrary to popular belief, WordPress SEO isn’t confusing or difficult. With the right SEO tools, you can optimize your site for search engines regardless of your skill level.

In this article, we’ll explain the basics of WordPress SEO and how to implement on-site SEO so you can master the SEO game.

What Is SEO & Why Is It Important?

SEO, or Search Engine Optimization, is the practice of optimizing your web pages to earn free organic traffic from search engines like Google.

Your goal is to follow WordPress SEO best practices so that search engines can easily crawl your site and index it for the right keywords. That way, users who search for the keywords you’re targeting can discover your content when they’re looking for it.

How Do Search Engines Work? Crawling, Indexing and Ranking

Search engines like Google use advanced algorithms to understand web pages and rank them appropriately in search results.

In simple terms, search engines perform three main functions:

- Crawling: Search engine bots (crawlers) follow links across the web to discover pages and their content. This is the first step toward having a search engine recognize your page and show it in search results.

- Indexing: Search engines store and organize the content found during crawling. When a user searches for information, search engines return results from their index.

- Ranking: Search engines order the indexed content by quality and relevance to each query. If your page ranks #1 for a particular query, it’s shown first in the results for that query.
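To make the three steps concrete, here is a toy Python sketch. It is nothing like Google’s actual algorithms. It assumes a tiny, made-up "web" stored as dictionaries of links and page text (all URLs and function names are hypothetical). The sketch crawls that web by following links, builds an index of words, and ranks pages by how often they mention the search words.

```python
from collections import Counter, deque

def crawl(start: str, links: dict[str, list[str]]) -> list[str]:
    """Crawling: discover pages by following links breadth-first from a start page."""
    seen, queue, found = {start}, deque([start]), []
    while queue:
        page = queue.popleft()
        found.append(page)
        for target in links.get(page, []):
            if target not in seen:  # visit each page only once
                seen.add(target)
                queue.append(target)
    return found

def build_index(pages: dict[str, str]) -> dict[str, Counter]:
    """Indexing: map each word to the pages containing it and how often it appears."""
    index: dict[str, Counter] = {}
    for url, text in pages.items():
        for word in text.lower().split():
            index.setdefault(word, Counter())[url] += 1
    return index

def search(index: dict[str, Counter], query: str) -> list[str]:
    """Ranking: order pages by how many times they contain the query's words."""
    scores: Counter = Counter()
    for word in query.lower().split():
        scores.update(index.get(word, Counter()))
    return [url for url, _ in scores.most_common()]

# A hypothetical four-page food blog.
links = {"/": ["/salad/", "/pancakes/"], "/salad/": ["/breakfast/"]}
text = {
    "/": "welcome to our food blog",
    "/salad/": "easy salad recipes and salad dressing",
    "/pancakes/": "fluffy pancakes for breakfast",
    "/breakfast/": "breakfast ideas with salad",
}
crawled = crawl("/", links)
index = build_index({url: text[url] for url in crawled})
print(search(index, "salad"))  # ['/salad/', '/breakfast/']
```

In this model, a page that no other page links to is never reached by `crawl`. That is one reason sitemaps and internal links matter.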

WordPress SEO Best Practices for Beginners

WordPress is one of the most SEO-friendly website builders. But that doesn’t mean your content will automatically be crawled, indexed and ranked on Google just because you’re using WordPress.

Fortunately, WordPress makes it easy to follow SEO best practices. Let’s take a look at some of the most important ones.

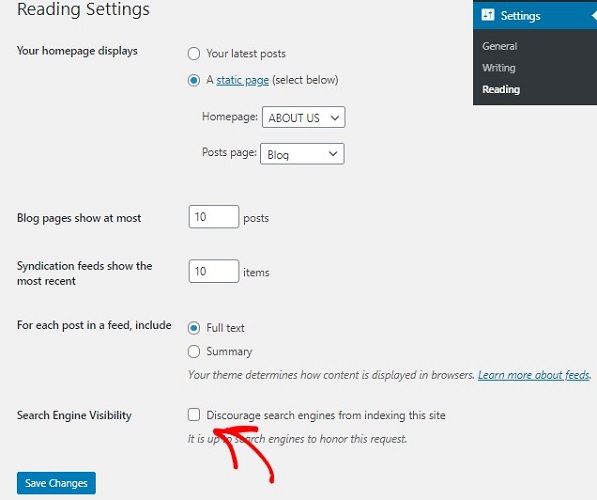

1. Enable Your Website Visibility Settings

The first thing you need to do is make sure your website isn’t hidden from search engines. WordPress comes with a built-in option to discourage search engines from indexing your site. This is useful while your site isn’t ready to be crawled and indexed and you need more time to work on it.

While setting up their site, some users check this option and then forget to uncheck it.

Log into your WordPress dashboard and go to Settings » Reading to find the Search Engine Visibility option. Make sure the box labeled "Discourage search engines from indexing this site" is unchecked.

Then click the Save Changes button at the bottom so you don’t lose your settings.

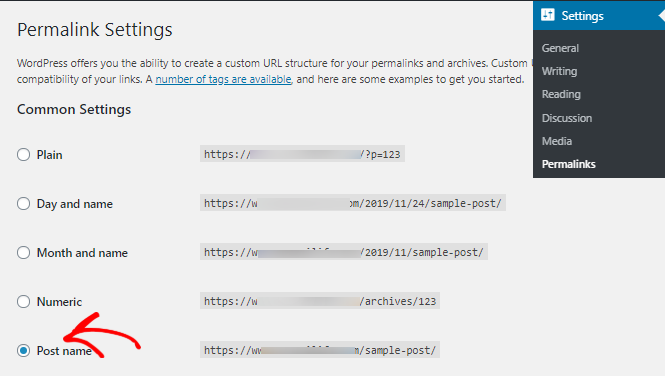
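When the "Discourage search engines" box is checked, WordPress typically adds a robots meta tag containing `noindex` to your pages’ HTML. The Python sketch below checks for that tag so you can confirm the setting is off after you save. It assumes you have already downloaded a page’s HTML as a string. The function name `is_noindexed` is hypothetical and is not part of WordPress.

```python
from html.parser import HTMLParser

class RobotsMetaParser(HTMLParser):
    """Collect the content of every <meta name="robots"> tag in a page."""

    def __init__(self) -> None:
        super().__init__()
        self.directives: list[str] = []

    def handle_starttag(self, tag, attrs):
        # HTMLParser lowercases tag and attribute names; values keep their case.
        attributes = {name: (value or "") for name, value in attrs}
        if tag == "meta" and attributes.get("name", "").lower() == "robots":
            self.directives.append(attributes.get("content", "").lower())

def is_noindexed(html: str) -> bool:
    """Return True if any robots meta tag on the page contains 'noindex'."""
    parser = RobotsMetaParser()
    parser.feed(html)
    parser.close()
    return any("noindex" in content for content in parser.directives)

hidden = "<head><meta name='robots' content='noindex, nofollow' /></head>"
visible = "<head><meta name='robots' content='max-image-preview:large' /></head>"
print(is_noindexed(hidden), is_noindexed(visible))  # True False
```

This sketch only inspects the HTML. A `noindex` directive can also be sent in an HTTP response header, which it doesn’t check.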

2. Use an SEO-friendly URL Structure

The next step is to make your URLs SEO-friendly. An SEO-friendly URL reveals what your content is about to both users and search engines.

Example of an SEO-friendly URL: https://www.isitwp.com/best-wordpress-seo-plugins/

Example of a non-SEO-friendly URL: https://www.isitwp.com/?p=20198

SEO-friendly URLs can help your pages rank higher in search results. The best way to make your URLs search engine friendly is to include your keywords in them.

WordPress lets you choose your permalink structure under Settings » Permalinks.

Select the Post name option, and don’t forget to save your changes. This step is recommended only for new sites. If your site is already established, it’s in your best interest not to change your current permalink structure, since doing so can cost you organic traffic and rankings.
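With the Post name structure, the last part of each URL is a slug derived from your post title. The Python sketch below shows the general idea of turning a title into a slug. It is a simplified approximation and not WordPress’s own `sanitize_title()` logic. The helper names `slugify` and `post_name_permalink` are hypothetical.

```python
import re
import unicodedata

def slugify(title: str) -> str:
    """Turn a post title into a lowercase, hyphen-separated URL slug."""
    # Split accented letters into base letter + accent, then drop the non-ASCII accents.
    normalized = unicodedata.normalize("NFKD", title)
    ascii_only = normalized.encode("ascii", "ignore").decode("ascii")
    # Replace every run of characters that aren't letters or digits with a single hyphen.
    slug = re.sub(r"[^a-z0-9]+", "-", ascii_only.lower())
    return slug.strip("-")

def post_name_permalink(site_url: str, title: str) -> str:
    """Build a 'Post name'-style permalink: site URL + slug + trailing slash."""
    return f"{site_url.rstrip('/')}/{slugify(title)}/"

print(post_name_permalink("https://www.isitwp.com", "Best WordPress SEO Plugins"))
# https://www.isitwp.com/best-wordpress-seo-plugins/
```

This reproduces the SEO-friendly example URL above from its title. Unlike the `?p=20198` form, the keywords end up in the address itself.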

3. Add an XML Sitemap to WordPress

An XML sitemap tells search engines which URLs on your website are available for crawling. It plays a major role in helping Google discover your pages and posts.

The easiest way to create a sitemap for your site is with the All in One SEO plugin. All in One SEO (AIOSEO) is an all-in-one SEO plugin that takes care of the details to make sure your site is optimized for search engines.

Once you install and activate the plugin on your site, AIOSEO automatically creates a sitemap for you.

You get all of the basic SEO features you need with the free version of All in One SEO. AIOSEO also has a Pro version that comes with more powerful features.

To learn more, read our detailed guide on creating an XML sitemap.

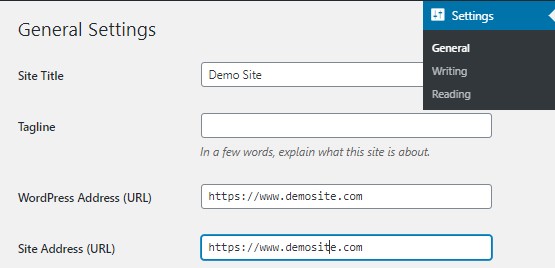
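AIOSEO builds the sitemap for you, but it helps to know what one contains. Under the sitemaps.org protocol, a sitemap is a `<urlset>` element with one `<url>` entry per page. Each entry holds a `<loc>` and, optionally, a `<lastmod>` date. The Python sketch below generates a minimal sitemap from a hypothetical list of pages. It is for illustration only and isn’t how AIOSEO works internally.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"

def build_sitemap(pages: list[tuple[str, str]]) -> str:
    """Return a minimal XML sitemap for a list of (URL, last-modified date) pairs."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, lastmod in pages:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc          # characters like & are escaped automatically
        ET.SubElement(url, "lastmod").text = lastmod  # W3C date format, e.g. 2024-01-31
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body

# Hypothetical pages on an example site.
print(build_sitemap([
    ("https://example.com/", "2024-01-31"),
    ("https://example.com/best-wordpress-seo-plugins/", "2024-02-15"),
]))
```

On a real site, the sitemap is served at a URL that you can then submit to Google Search Console.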

4. WWW vs. Non-WWW

You may have noticed that some websites use www at the beginning of their web address (www.example.com) while others don’t (example.com). Search engines treat these as two different web addresses, and the same goes for the http:// and https:// versions of a URL. To avoid confusion, pick one preferred address for your website and use it consistently.

WordPress lets you set your preferred address under Settings » General in your dashboard. Enter the same preferred URL in both the WordPress Address (URL) and Site Address (URL) fields.
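The point of choosing one preferred address is that every variant (http or https, www or non-www) should end up at a single URL. Here is a minimal Python sketch of that mapping. It assumes a hypothetical preferred address of https://www.example.com and ignores port numbers. On a live site, this mapping is typically enforced with permanent (301) redirects rather than with a script like this.

```python
from urllib.parse import urlsplit, urlunsplit

# Hypothetical preferred address: the value you'd enter in Settings » General.
PREFERRED_SCHEME = "https"
PREFERRED_HOST = "www.example.com"

def canonicalize(url: str) -> str:
    """Map http/https and www/non-www variants of your own URLs onto the preferred address."""
    parts = urlsplit(url)
    host = (parts.hostname or "").removeprefix("www.")  # hostname is already lowercased
    if host != PREFERRED_HOST.removeprefix("www."):
        return url  # a different site: leave the URL alone
    path = parts.path or "/"
    return urlunsplit((PREFERRED_SCHEME, PREFERRED_HOST, path, parts.query, parts.fragment))

for variant in ["http://example.com/blog/", "http://www.example.com/blog/",
                "https://example.com/blog/", "https://www.example.com/blog/"]:
    print(canonicalize(variant))  # each prints https://www.example.com/blog/
```

All four variants collapse to one address. That single address is the one search engines should see.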

5. Add Site to Google Search Console

Google Search Console helps you track your website’s performance on Google. It reports important data about your site’s visibility and performance, such as search queries, impressions, clicks and click-through rate (CTR). It also shows your indexing status and errors, so you can fix them and drive more organic traffic.

If it detects issues on your site, Search Console can notify you by email so you can take action right away.

Here’s how you can add your site to Google Search Console.

6. On-page SEO

On-page SEO is the practice of optimizing individual pages on your site to improve their search rankings, visibility and traffic. It involves working on your content, images and even the HTML source code.

You can check out these SEO audit tools to help you monitor and analyze your site’s performance.

A few things to keep in mind while doing on-page SEO are:

- Content Strategy

- Image Optimization

- Adding Categories and Tags

- Optimizing Your Title

- Adding Meta Descriptions

- Internal Linking

Let’s look at each of these points in detail.

7. Content Strategy

To find out what questions your readers might have, you need to do keyword research as part of your content planning. It also helps you figure out which keywords have high search volume, how competitive they are, whether they show buying intent and more.

Start with a keyword research tool that gives you in-depth keyword data, competition analysis, keyword position tracking and other useful features.

To learn more, check out how to do keyword research.

Keyword research also helps you understand your topic thoroughly before you start drafting your post. Only then will you be able to write a comprehensive post that answers your readers’ questions.

Make your post easy to read. Readability affects how users engage with your content, which in turn can influence your search rankings.

8. Image Optimization

Images play a big role in the success of your post. Without images, your post will appear dull and boring. Images can make your post engaging and interesting to read.

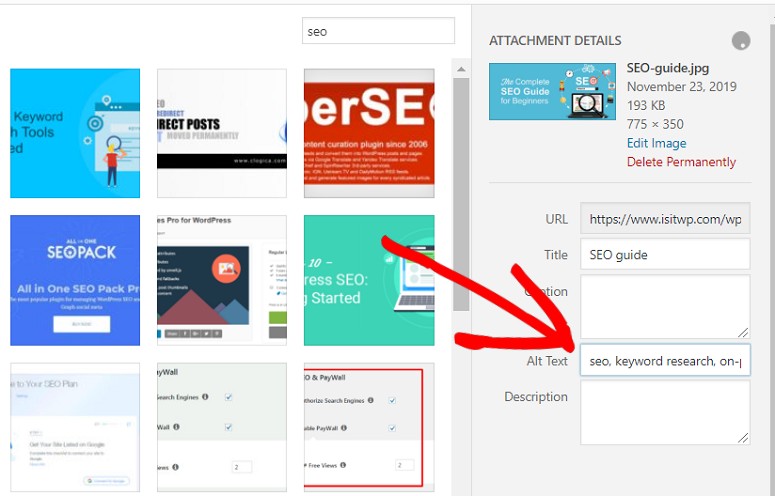

But simply adding images to your post isn’t enough. You should also help search engines understand what these images are about. You can do that by adding alt text to your images. Alt text is a short written description of an image. Keep it brief and descriptive, and make sure it describes what the image actually shows.
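If you want to audit existing posts, a short script can list images that have no alt text. This Python sketch assumes you have a post’s HTML as a string. The function name `images_missing_alt` is hypothetical. It flags empty alt attributes too. However, an empty alt (`alt=""`) is the correct choice for purely decorative images, so treat the results as a review list rather than as errors.

```python
from html.parser import HTMLParser

class MissingAltFinder(HTMLParser):
    """Collect the src of every <img> whose alt attribute is missing or blank."""

    def __init__(self) -> None:
        super().__init__()
        self.missing: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag != "img":
            return
        attributes = dict(attrs)
        alt = attributes.get("alt")
        if alt is None or not alt.strip():  # no alt attribute, or only whitespace
            self.missing.append(attributes.get("src") or "(no src)")

def images_missing_alt(html: str) -> list[str]:
    finder = MissingAltFinder()
    finder.feed(html)
    finder.close()
    return finder.missing

post = '<p><img src="salad.png" alt="Green salad in a bowl"><img src="pancakes.png"></p>'
print(images_missing_alt(post))  # ['pancakes.png']
```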

9. Adding Categories and Tags

Categories and tags help you organize your website content. This helps your readers and search engines find your content easily.

Categories group your content into the major topics covered on your blog. Tags, on the other hand, describe the specific topics discussed in an individual blog post.

Still confused? Think of it this way: a blog post filed under the Food category can have tags like salad, breakfast and pancakes.

So make sure you add the right categories and tags to your content before you publish it. Learn more about adding categories and tags to your content.

10. Optimize Your Title

Your title is the first thing that a visitor will notice in your article. Use IsItWP’s headline analyzer to come up with titles that work.

You can also try using power words in your title. Power words can trigger an emotional response in your users and attract them to click on your title.

11. Adding Meta Descriptions

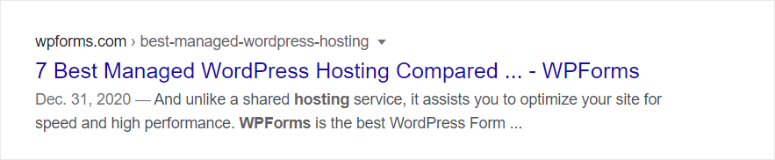

A meta description is an HTML tag containing a brief summary of your content, which search engines may show as the snippet below your title in search results. Adding meta descriptions to your posts and pages makes it easier for users and search engines to understand what your content is about.

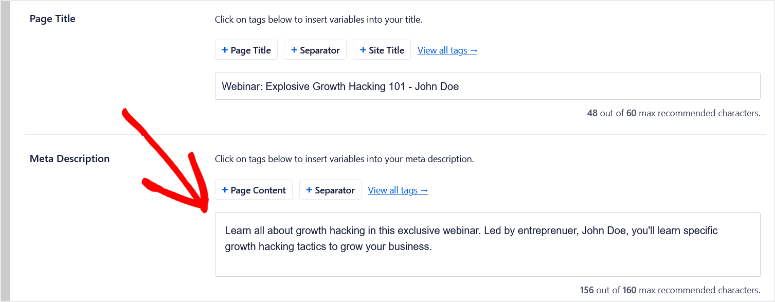

If you’re using All in One SEO, you can add your meta description by scrolling down to the AIOSEO settings below the post editor. You can type it manually or use tags that insert variables such as the post title.

Try to keep it under about 160 characters, since longer descriptions are usually cut off in search results. You should also make sure your focus keyword appears at least once in your meta description.

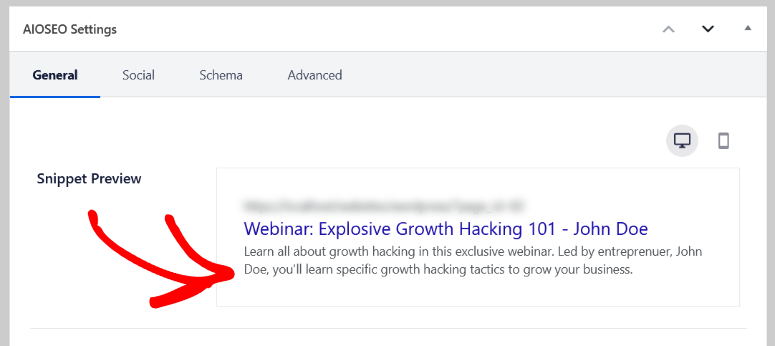

All in One SEO will also give you a snippet preview so you can see how your meta description will look in search results.
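Here is a small Python sketch of the two checks above: a length limit and the presence of your focus keyword. The function name and the 160-character default are assumptions for illustration. Search engines truncate snippets based on display width rather than an exact character count, so treat the limit as a rule of thumb.

```python
def check_meta_description(description: str, focus_keyword: str,
                           max_length: int = 160) -> list[str]:
    """Return a list of problems with a meta description; an empty list means it passed."""
    text = description.strip()
    if not text:
        return ["meta description is empty"]
    problems: list[str] = []
    if len(text) > max_length:
        problems.append(f"{len(text)} characters; may be cut off after about {max_length}")
    if focus_keyword.lower() not in text.lower():  # case-insensitive keyword match
        problems.append(f"focus keyword '{focus_keyword}' not found")
    return problems

print(check_meta_description(
    "Learn WordPress SEO basics: visibility settings, permalinks, sitemaps and more.",
    "WordPress SEO",
))  # []
```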

12. Internal Linking

Internal linking is a great way to build contextual relationships between your older and newer posts. Once you have enough posts on your blog, you can start linking them to each other. This way, you can send visitors to your older posts and generate some traffic for them.

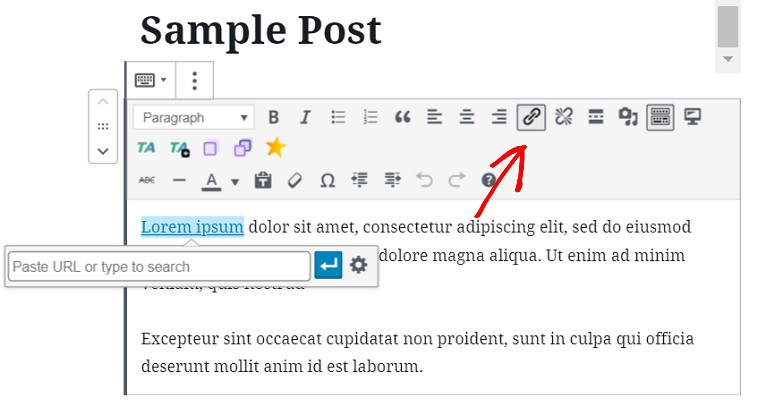

Adding internal links in WordPress is easy. Select the text you want to link, then click the link button in the toolbar. In the box that pops up, paste the URL of the post you want to link to (or search for it by title) and press Enter to apply the link.

By making internal linking a habit, you can ensure that your old posts continue to get some traffic.

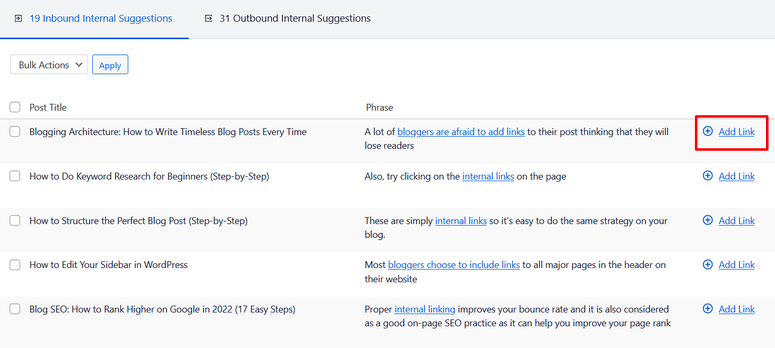

To make internal linking even easier, you can use All in One SEO’s Link Assistant addon. Link Assistant automatically generates a link report for your site and gives you relevant linking suggestions.

You can add links to your content with one click, without editing each post manually. It’s the fastest way to make sure you’re creating internal links for both new and old posts.
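Link Assistant does this analysis for you, but the underlying idea is simple. A post that no other post links to (an "orphan" post) gets no internal traffic and is harder for crawlers to find. The Python sketch below assumes you have already collected each post’s outgoing internal links into a dictionary. The data and the function name `find_orphans` are hypothetical.

```python
def find_orphans(links: dict[str, set[str]]) -> list[str]:
    """Return the posts that no other post links to, given each post's outgoing internal links."""
    linked_to: set[str] = set()
    for source, targets in links.items():
        linked_to.update(target for target in targets if target != source)  # ignore self-links
    return sorted(post for post in links if post not in linked_to)

# Hypothetical site: /pancakes/ links out, but no other post links to it.
site = {
    "/salad/": {"/breakfast/"},
    "/breakfast/": {"/salad/"},
    "/pancakes/": {"/pancakes/", "/salad/"},
}
print(find_orphans(site))  # ['/pancakes/']
```

Orphans are good candidates for new internal links from related, older posts.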

13. Nofollow External Links

Backlinks are the backbone of SEO. In the same way, when you link to another website, you’re passing some of your site’s SEO value to it and signaling that the link is trustworthy. This SEO value is often called link juice.

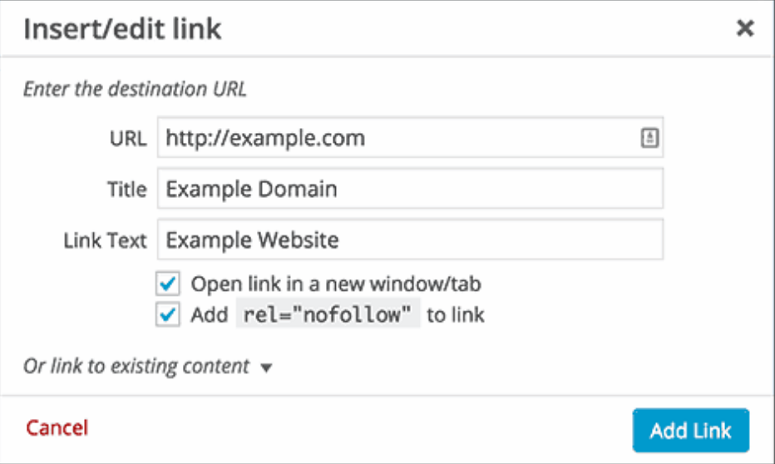

If you’re linking to a low-quality website, it’s in your best interest to add the nofollow attribute to that external link. That way, you don’t pass link juice to it.

If you’re editing your post’s HTML in WordPress (for example, in the classic editor’s Text tab), here’s what a normal link looks like:

`<a href="http://demosite.com">Demo Website</a>`

Here’s the same link with the nofollow attribute:

`<a href="http://demosite.com" rel="nofollow">Demo Website</a>`
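To find external links that might need nofollow, you can scan a post’s HTML. This Python sketch uses example.com as a placeholder for your own domain and treats www and non-www as the same site. It lists links to other hosts whose `rel` attribute doesn’t include `nofollow`. The class and function names are hypothetical. Deciding which of those links point to low-quality sites is still up to you.

```python
from html.parser import HTMLParser
from urllib.parse import urlsplit

class ExternalLinkAudit(HTMLParser):
    """Collect external links whose rel attribute does not include "nofollow"."""

    def __init__(self, own_host: str) -> None:
        super().__init__()
        self.own_host = own_host.lower().removeprefix("www.")
        self.followed_external: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        attributes = {name: (value or "") for name, value in attrs}
        href = attributes.get("href", "")
        host = (urlsplit(href).hostname or "").removeprefix("www.")
        if not host or host == self.own_host:
            return  # relative or internal link: nothing to check
        rel_tokens = attributes.get("rel", "").lower().split()  # rel can hold several values
        if "nofollow" not in rel_tokens:
            self.followed_external.append(href)

def followed_external_links(html: str, own_host: str) -> list[str]:
    audit = ExternalLinkAudit(own_host)
    audit.feed(html)
    audit.close()
    return audit.followed_external

post = ('<a href="/about/">About</a> '
        '<a href="https://www.example.com/blog/">Blog</a> '
        '<a href="http://demosite.com">Demo Website</a> '
        '<a href="http://demosite.com" rel="nofollow">Demo Website</a>')
print(followed_external_links(post, "example.com"))  # ['http://demosite.com']
```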

14. Link Building for Authority

Building backlinks to your site from established websites in your niche is one of the most effective ways to boost your rankings.

A few ways to build backlinks are:

- Write great content that others want to link to

- Email outreach

- Guest blogging

- Guest blogging

Learn more about link building here.

Measuring SEO Success

SEO best practices keep changing, and it’s difficult to keep up with every update, but the points above are the basic steps to get your SEO game started. Once you’ve put them in place, you should also know how to measure your SEO success.

To measure your SEO success, you need to know how much organic traffic you’re gaining. You can see this data in your Google Analytics dashboard.

Check out how to add Google Analytics to WordPress.

So that’s it. We hope this article helps you secure your position in the top search results. While working on your site’s SEO, you might also want to use some robust SEO tools that help you grow your traffic faster.

FAQs on WordPress SEO

What are the essential SEO elements for WordPress?

Key SEO elements include optimizing your content, meta tags, images, site speed, mobile-friendliness, and ensuring a user-friendly experience.

What is a WordPress SEO plugin, and which one should I use?

A WordPress SEO plugin is a tool that helps you optimize your website for search engines. All in One SEO is the top SEO plugin for WordPress. It has everything you need and acts as an all-in-one solution to optimize your site.

How can I improve my website’s content for SEO in WordPress?

- Use relevant keywords

- Write high-quality, engaging content

- Optimize headings and meta descriptions

- Focus on readability and user experience

Is mobile SEO important for WordPress sites?

Yes, mobile SEO is crucial. Google prioritizes mobile-friendly websites in its search rankings. Ensure your WordPress site is responsive and provides a good user experience on mobile devices.

What are backlinks, and how can I build them for my WordPress site?

Backlinks are links from other websites to your site. You can build them through guest posting, creating high-quality content, networking with other website owners, and using social media.

Are there any SEO practices to avoid in WordPress?

Yes, here’s what not to do in SEO:

- Keyword stuffing

- Using duplicate content

- Ignoring image optimization

- Ignoring mobile optimization

- Not updating your content regularly

- Any black hat SEO technique

How do I monitor and measure the success of my WordPress SEO efforts?

You can use tools like MonsterInsights, Google Analytics and Google Search Console to monitor traffic, rankings, and user behavior on your WordPress site.

We also have a full review of the All in One SEO tool that we hope you’ll find helpful. It covers everything you can do with this tool to boost your site’s SEO with just a few clicks, no coding needed.
